Logic is often taken to describe eternal truths. By being able to isolate properties and functions from the variables they would be commonly subjected to, it designates at least an activity whose contents can be considered independent from mundane contingencies. Yet, both logic and its formalisation have a history. From Aristotle and Euclid to modern logicians such as George Boole, Gottlob Frege, Charles Sanders Peirce, Kurt Gödel, and Alan Turing, logic neither meant exactly the same thing nor was it formalised in the same way. Coming from rather different perspectives on this, Catarina Dutilh Novaes and Reviel Netz both underline the interactive and artifactual dimension of logic’s historical construction. We put them in conversation in order to explore what their different perspectives on this history can teach us about logic.

Glass Bead: It is perhaps uncontroversial that logic has a history: its understanding is cultural and historical, and its formalization is conventional. The standard view is that logic arises in ancient Greece, and undergoes a transformation in the 19th century with the introduction of quantification (see Danielle Macbeth’s essay in this issue1). Yet, this also seems to stand in tension with the idea that logic would pertain to truths that are considered universal and ahistorical. While the criterion of necessary truth preservation in classical deduction has the appearance of being valid for all time, both of you have emphasized the concrete historical developments that established this framework. Not only is there a tension between the changing form of logic and the putatively eternal truths that are its content, but this relates to a more complex tension between the external or artifactual elaboration of logic and mind, and the internal capacities of thinking. How can we understand the relation between the contingent historical development of logical formalisms as concrete artifactual technologies and the necessary truths that logic is supposed to grasp?

Catarina Dutilh Novaes: There is indeed a tension between these two ideas, namely that logic as a theory or branch of knowledge must have developed through historically interesting paths, and the idea that the subject matter of logic (if logic has a subject matter at all, which is one of the big debates in the history of the philosophy of logic) consists of eternal, necessary truths. But there are different ways out of this tension, so to say.

For example, one may maintain that logic does deal with necessary, eternal truths and still be interested in the history of how we come to discover these truths—just as, say, the historian of chemistry is interested in how we gradually came to discover basic facts about the constitution of matter which are nevertheless ahistorical (to some extent at least). But in this case the question is whether such historical analyses will be relevant for understanding logic as such—that is, beyond their historical interest. To pursue the analogy, it is not obvious that the modern chemist has much to learn for her research from the historian of chemistry. (I think the case for this position with respect to logic can still be made, but some extra work is required here.)

Alternatively, adopting a more resolutely ‘human’ perspective, we may be interested in what these presumed eternal truths of logic may mean for human practices, why they matter. Presumably, there are infinitely many human-independent truths about the world that are nonetheless of no real interest to people (e.g. how many grains of sand there are on Ipanema beach at a given point in time). In this case, the historical perspective has something to offer in the sense of explaining why, at a certain point in time, the investigation of the presumed eternal truths of logic became relevant for humans. I take it that both Reviel and I are very much interested in this question (while remaining non-committal on the ontological status of these presumed truths): why is it that at a certain point in time (or most likely different points in time, maybe different places) this became a salient question for humans, so that they deemed it worth their time to investigate it further? Reviel and I share the idea that a major motivation for interest in what we now describe as logic was the debating practices of ancient Greece, which in turn were motivated by developments both in politics and in the sciences. (My Aeon piece argues for this in more detail.2) This historicist perspective is still compatible with a realist view of the eternal truths of logic.

Finally, one can simply deny that logic is in the business of describing eternal truths, and hold that all there is to logic is the development of concepts that respond to various needs that people experience in different situations, concepts that do not latch onto any independent (let alone eternal, necessary) reality. Personally, I prefer to stay agnostic on the ontological status of putative logical truths, especially because, even if they did exist, there is the huge problem of our epistemic access to these facts. (In philosophy of mathematics this is known as the Benacerraf challenge with respect to numbers.) So, either way, I take it that all we really have epistemic access to are the ways in which logic developed over time in connection with human practices, in the context of human cognitive possibilities. But many philosophers of logic of course disagree with me on this!

Reviel Netz: I have often dabbled myself in this kind of dialectic. It is an intuitively compelling tension to bring up, but it actually kind of crumbles to the touch, as Catarina has already noted. The riddle in fact goes in the other direction. Contrary to the idea that we would have assumed the possibility of objective knowledge, discovered the fact of historicity, and then come to doubt objectivity, we should start by being aware of the fact of historicity and then, once we are equally made aware of the reality that humans have obtained objective knowledge in many diverse fields, this should excite us as a historical and indeed philosophical puzzle. While it is always possible to provide formal epistemological accounts of how knowledge in a rather abstract sense is possible, what such accounts leave unanswered is the question of how, in historical fact, this or that form of knowledge was made possible, at certain times and places. This, for me, is the real task of history for the study of epistemology.

GB: With regard to your work, Catarina, it seems that the question of the artifactual nature of logic first concerns the relation between implicit and explicit rules, or between informal practices and their formalization. Here you notably argue that, even if formal ‘explicitation’ of logical consequence is preceded by a more ‘everyday’ notion that is implicit in common dialogues, it is not intuitively given to thought but rather a theoretical construct that has a certain historical, and presumably artifactual, development. Contrary to Robert Brandom, for instance, you do not think that the principles of logic pervade ordinary discourse, only to be made explicit by formalization, but that they emerge from rather contrived practices of disputation. In order to avoid supposing a kind of pre-theoretical proto-logical form implicit in thinking, it seems that we must recognize the thoroughgoing artifactuality of all (logical) thought. The historical question then would be to understand how, where, and why, such forms developed at all. How can we explain the progression from pre-theoretical pragmatic behavior to the theoretical construction of implicit notions, and from there to explicit formalizations?

CDN: I do think there is a sort of continuum between more ‘mundane’ argumentative practices and the more regimented argumentative practices that then give rise to the notion of logical consequence, understood as tightly related to necessary truth preservation. But the fundamental difference is that ‘regular’ argumentative practices tend to rely on principles of reasoning that are thoroughly defeasible, whereas the notion of logical consequence as understood by philosophers and logicians tends to be indefeasible. The idea is that in most life situations, you may draw inferences on the basis of the information available to you, plus some background assumptions, but these inferences will not be necessarily truth-preserving and monotonic: the available information does not make the conclusion you draw necessary, but rather probable (likely).3 This means that, at a later stage, new information may come in which will make you withdraw the conclusion you previously drew, and that is absolutely the rational thing to do! Similarly, in most circumstances the information available to you will not allow for necessary conclusions to be drawn, so your choice is between not drawing any conclusion at all, or drawing the defeasible conclusions that seem likely to you at that point.

In other words, a defeasible notion of consequence only requires you to look at the most plausible models of the premises you have, and see what holds in them; an indefeasible notion of consequence requires you to look at all the models of your premises, not only the more ‘normal’ ones. This way of conceptualizing the difference was introduced by Yoav Shoham, a computer scientist, under the name of preferential logics (which are a family of non-monotonic logics). From this perspective, what needs explaining is, under which circumstances does it become relevant and desirable to take into account all situations in which the premises are true, not only the more normal ones? In most real-life contexts, looking at all situations is overkill. So, one way this can be explained is that, in certain debating contexts, arguments that are necessarily truth-preserving are advantageous for the one proposing them, because they are indefeasible: no matter what new information the opponent might bring in, they will not be defeated. (This corresponds to the idea that a deductive argument represents a winning strategy for the person defending it.) This is also how I read Reviel’s story on the emergence of deductive argumentation in ancient Greece; a peculiar set of circumstances gave rise to interest in these arguments that are more ‘demanding’ than defeasible arguments.
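As an illustrative aside (not part of the conversation), the contrast between the two notions of consequence can be sketched in a few lines of Python. This is a minimal toy, not Shoham's formal system: the three atoms, the `abnormality` ranking, and all function names are assumptions invented for the example. Classical consequence checks every model of the premises; the preferential variant checks only the most "normal" ones.

```python
from itertools import product

# Hypothetical propositional atoms for a toy example.
ATOMS = ("bird", "penguin", "flies")


def all_worlds():
    """Every truth assignment to the atoms; each one is a candidate situation."""
    return [dict(zip(ATOMS, values))
            for values in product([False, True], repeat=len(ATOMS))]


def models(premises):
    """The situations in which every premise holds."""
    return [w for w in all_worlds() if all(p(w) for p in premises)]


def abnormality(world):
    """Assumed preference ranking: a lower score means a more 'normal' situation."""
    score = 0
    if world["penguin"]:
        score += 1  # penguins are treated as unusual
    if world["bird"] and not world["flies"]:
        score += 1  # non-flying birds are treated as unusual
    return score


def entails_classically(premises, conclusion):
    """Indefeasible consequence: the conclusion holds in ALL models of the premises."""
    return all(conclusion(w) for w in models(premises))


def entails_preferentially(premises, conclusion):
    """Defeasible consequence: the conclusion holds in the MOST NORMAL models only."""
    ms = models(premises)
    if not ms:
        return True  # no models at all: vacuously entailed
    best = min(abnormality(w) for w in ms)
    return all(conclusion(w) for w in ms if abnormality(w) == best)


# Premises and conclusions are predicates over a world.
is_bird = lambda w: w["bird"]
is_penguin = lambda w: w["penguin"]
penguins_do_not_fly = lambda w: (not w["penguin"]) or (not w["flies"])
flies = lambda w: w["flies"]

few_premises = [is_bird]
more_premises = [is_bird, is_penguin, penguins_do_not_fly]

print(entails_classically(few_premises, flies))      # False
print(entails_preferentially(few_premises, flies))   # True
print(entails_preferentially(more_premises, flies))  # False
```

In this toy, "it flies" follows defeasibly from "it is a bird" but is withdrawn once the penguin information arrives, which is the non-monotonic behaviour described above. Nothing that follows classically from the smaller premise set is ever lost when premises are added, which is why looking at all models yields conclusions that no new information can defeat.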

Once these contrived debating practices are in place, they can be further regimented and turned into what we usually call logical systems. The syllogistic system as presented in the Prior Analytics by Aristotle can be viewed as one of the first occurrences of such a regimentation (see my paper “The Formal and the Formalized”4).

GB: Reviel, in your book The Shaping of Deduction (1999), you emphasized the crucial role that drawing diagrams, understood as scripto-visual artefacts tapping human cognitive resources through visualization, played in the emergence of a new cognitive ability—namely, the construction of chains of deduction. The difference you seem to posit between these diagrams and other forms of communication such as natural language, points to what may be described as an engine of universality, interlinking highly local/specific practical particularities (specialized techniques in Ancient Greece) and the abstract, global horizon implied by deduction (what you call the shaping of generality). In the history of logic, how did the diagram foster the cognitive ability to navigate these different scales?

RN: I do not have a model where the diagram alone is explanatory. The Greek combination of diagram and formulaic language provides the cognitive tools with which the specific task of producing a Greek-style proof may be obtained. The typical tasks studied by mathematics have shifted historically, and there were also various shifts in media and contexts of use, which allowed the rise of other cognitive tools, of which the most important is visual, ‘algebraic’ symbolism, which in a sense unites the diagram and formulaic language; it is a fantastic tool! It has to be emphasized that we do not have direct access to ancient Greek diagrams; virtually all evidence stems from Medieval manuscripts. This is one reason why past scholars tended to avoid even the question, what were Greek diagrams like. But of course our evidence for antiquity always has to be pieced together indirectly, and I think the same can be done for Greek diagrams. What seems to emerge then is that Greek diagrams were more symbolic and less iconic than we tended to assume for geometrical diagrams. They are ambiguous between icon and symbol. They are not intended to be a picture, through which you can see the object; they are rather understood as a representation of the geometrical relations, through which you can think about the object. This is another reason why they were not studied by past scholars: because the modern assumption that the language/diagrams divide corresponds with a symbol/icon divide made Greek diagrams opaque to modern scholarship.
Greek diagrams clarify the identity of the protagonists (these are the points and lines) and their relations (here they intersect, here they contain), but they are not drawn to scale; they use simplified schematic representations (triangles are typically isosceles, for instance, regardless of which triangle is supposed to be discussed), and they might even represent straight lines by curved, or vice versa, if this helps with the resolution of the picture and so the clarity of the relations. They are thus somewhat ‘topological.’ All this serves the geometrical argument, as one can rely on diagrams, precisely to the extent that one reads in them this schematic, topological information.

Now, as you can see, the shape of Greek geometrical diagrams can be explained functionally: it came out of a need to use diagrams as part of the geometrical reasoning, for which purpose it is better to think of them as symbols more than icons. They did not necessarily emerge in their particular form for this reason. A certain schematic minimalism is simply typical of Greek writing as such; it is the writing of a culture based on performance, where writing is but a secondary tool for the production of performative experiences. And so, their diagrams are schematic, just as their sentences are ‘schematic’—lacking, for instance, punctuation or even spacing between words. The topological character of Greek diagrams is an unintended consequence of this minimalism. Another consequence is a certain ontological parsimony. In one sense, the diagram is the object of the discourse. It returns throughout to objects such as “the triangle ABC”—which means the triangle next to whose vertices one can find the letters A, B, C. So, at some level, you discuss a concrete object, right there on the papyrus. But then again, because of the ambiguity between icon and symbol, it never gets clarified quite what this means. Are you talking about some ideal triangle, for which the written diagrams serve as a symbol? Or is it about a look-alike for the figure on the page? This translates into a deeper ontological ambiguity, concerning the nature—physical, or purely geometrical—of the objects studied by Greek geometry.

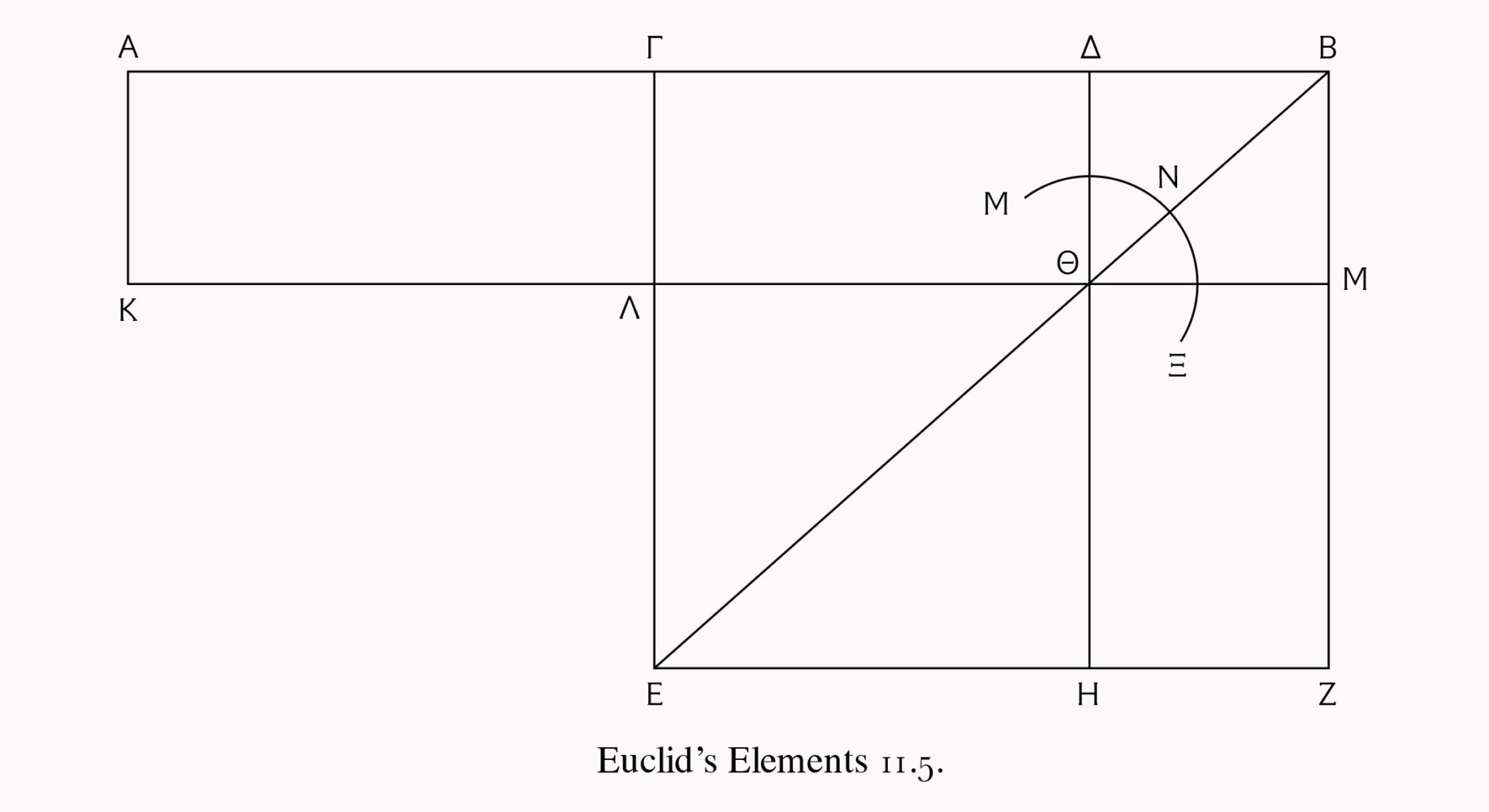

Euclidean lettered diagram redrawn by Reviel Netz, in his book The Shaping of Deduction in Greek Mathematics: A Study in Cognitive History, Cambridge: Cambridge University Press, 1999.

GB: In relation to this, you argue that ancient Greek mathematicians were not so much interested in the ontological status of logic, but rather only in the stabilization and internal coherence of a formal mathematical language. You write that the diagram acted for them as a substitute for ontology; that is, as a means through which they could seal mathematics off from philosophy. For you, the function of the lettered diagram is to ‘make-believe,’ something that stands between pure literality and pure metaphor. Yet, to make-believe here, is not just performative; it relies on a complex articulation between what you call the shaping of necessity and what you call the shaping of generality. Could you explain this relation between the relative single-mindedness that seems to have determined the way in which ancient Greek mathematicians were able to retreat from any ontological argument, and the way in which the objective truth procedures that they invented and stabilized also had implications that exceeded mathematics?

RN: As a matter of historical, sociological reality, Greek mathematics was shaped with relatively little debt to specific philosophical ideas. Its key practices therefore tend, indeed, to elide specific philosophical questions. How Greek mathematicians ended up this way is a complicated question, but very quickly I can say that they perhaps wished to insulate themselves from a field characterized by radical debate, not because they wished to avoid debate as such but because they wanted to carve out a field of debate of their own professional identity. Then, the rich semiotic tools of Greek mathematics—language and diagram—allow a certain room for ambiguity concerning the nature of reference, and this is something Greek mathematicians seem to embrace, and I agree with your formulations there. In general, the attitude is one of make-believe. You ask that a circle may be drawn and, with a free-hand shape in place, you proceed as if it were the circle you wished to have there. You mentally enter a universe where everything that can be proved to be doable in principle is, indeed, done in practice, once you wish for it. Indeed, this remains the attitude of mathematics even today, and so we may get too accustomed and desensitized to this attitude. But mathematics is essentially a make-believe game, a practice emerging with the Greek geometrical diagrams: taking an imperfect sign as if it were perfect.

GB: Catarina, in your book on formal languages5 you understand formalisms as cognitive artifacts that, like the deductive method, allow for a de-biasing effect that counters the pervasive cognitive tendency towards what you call “doxastic conservativeness” (i.e. attachment to prior beliefs). This seems to have important consequences for art. Firstly, at least since modernity, art has been very much engaged with overcoming entrenched beliefs and generating novel conventions; moreover, formalized procedures have been integral to this turn. Secondly, it could be argued that (at least non-representative) art is constitutively concerned with what you call de-semantification. It seems that, in this sense, much art could be considered as a kind of operative writing (or gesture), in that it is literally a form of thinking that has been externalized onto a particular medium. In the pluralistic conception of human rationality you put forward, how do you view artistic practice within the context of the need to counter doxastic conservativeness?

CDN: Although I have never thought much about the connections between my thoughts on de-semantification and art, I suppose one general connection is that, to some extent, the idea of breaking away from established patterns of thinking by means of cognitive artifacts such as notations is a search for creativity and innovation, in the sense of attaining novel ideas and beliefs. (Yet, of course, my emphasis on mechanized reasoning might in fact suggest the exact opposite!) Insofar as art is also tightly connected with novelty and creativity, then there might be interesting connections here worth exploring. But as well described by Sybille Krämer, from whom I take the notion of de-semantification,6 notations also allow for a more ‘democratic’ approach to cognitive activity, in the sense that insight and ingenuity are required to a lesser extent. Instead, perhaps in this case the user of formalisms might be compared to the skilled artisan, who can execute beautiful objects by deploying the techniques they are good at, without necessarily seeking innovation. But again, this is not something I have given much thought to until now, so these are just some incipient remarks. Definitely something to think about in the future.

Incidentally, I have however been working on the question of why mathematicians often talk about mathematical proofs using aesthetic vocabulary, and here some of Reviel’s ideas are very interesting. He has this paper7 where he uses concepts from literary theory like poetry to analyze mathematical proofs, understood as written discourse that is relevantly similar to poems so as to allow for a literary analysis of mathematical proofs also in their aesthetic components. Classical poetry and mathematical proofs have in common the fact that they are forms of discourse constrained by fairly rigid rules, and beauty occurs when creativity and novelty emerge despite, or perhaps because of, these constraints. But maybe Reviel should talk about this, not me.

RN: I would have indeed at least one quick observation to make here. In the paper you mention, I may have in fact over-emphasized the idea of formalism. There’s basically no real historical practice of mathematics which is truly formalist. Mathematicians, in historical reality, are almost always engaged with theories that they understand semantically. Because after all this is how you understand and you must operate through your understanding. And the same is true in art. Artists, in historical practice, are people; they tell stories. There are those few aberrant moments in high modernism where this seems to have been avoided but even tonal music is not very far from narrative forms and is in fact embedded, historically, in various forms of song and opera.

GB: The idea that logic has a prescriptive, or normative, import for reasoning—that is, a logically valid argument should compel an agent to act in accordance with the moral law that can be deduced from it—has been heavily criticized within philosophy, and is mostly vehemently rejected within the humanities. Catarina, you agree that the notion of necessary truth preservation that is embedded in many logical systems, in particular the classical framework, is not descriptively valid for reasoning in most everyday situations, and further concede that there are good arguments against considering logic as prescriptive for thought. However, you argue that a historically informed reconceptualization (or rational reconstruction) of the deductive method according to a multi-agent dialogical framework shows that the normativity of logic may be upheld but with significant modifications to the classical image. The normative import of logic for reasoning that results seems to imply a potentially different take on the problem of social relativism. Could you expand on this? The same question could also be asked to you Reviel, notably in relation to the difference you seem to make in the introduction of your book with what Simon Schaffer and Steven Shapin have argued in their seminal book, Leviathan and the Air-Pump (1985).

CDN: In a weak sense, I am a social constructivist in that I am interested in how deductive practices emerged and developed in specific social contexts. But I do not think that this entails rampant relativism of the kind that is often (correctly or not) associated with the strong program of social constructivism. One way to see this is to think of Carnap’s principle of tolerance with respect to logic. Prima facie, he seems to be saying that any set of principles that satisfy very minimal conditions (maybe consistency, or in any case non-triviality) counts as a legitimate logical system. But he also emphasizes that, ultimately, what will decide on the value of a logical system is its applicability. (The pragmatic focus is made clearer in his concept of explication, which I wrote a paper on with Erich Reck.)8 In turn, what makes it so that some systems will be more useful than others probably depends on a lot of factors: facts about human cognition, facts about the relevant physical reality, facts about social institutions, etc. But it is not the case that any logical system is as good as any other (for specific applications).

As it so happens, deduction is a rather successful way of arguing in certain circumstances, and indeed the Euclidean model of mathematical proofs remained extremely pervasive for millennia. (It was only in the modern period that other, more algebraic modes of arguing in mathematics were developed.) You can still ask yourself what makes deduction so successful and popular for certain applications, despite (or perhaps because of) being a cognitive oddity. A lot of my work has focused precisely on addressing this question. But my strategy is usually to look for facts about human practices, and how humans deal with the world and with each other, to address these questions, rather than a top-down, Kantian transcendental approach that seeks to ground the normativity of logic outside of human practices. By the way, just to be clear, I am equally interested in the biologically determined cognitive endowment of humans, presumably shared by all members of the species, and the cultural variations arising in different situations. It is in the essence of human cognition to be extremely plastic, and so within constraints, there is a lot of room for variation.

RN: In fact, I do not think I differ that much from Schaffer and Shapin. I find what they write compelling (and I think they do not disagree too much with me). Yet, it is true that I am, at the end of the day, simply bored by relativism as such. The starting points for my study are the realities: the reality of truth, the reality of history. What I think happens is that many people in the humanities today, who are interested in the reality of history, are often less interested in the reality of truth (but I do not think this can really be imputed to Schaffer and Shapin). We can analyze the contingent paths that lead there, but that is not the key point right now. The main observation I would add is that if you are less interested in the reality of truth—“Hey, Science and Logic Absolutely Rock! Isn’t this Incredible!”—there are certain questions you tend to ignore, notably: what-makes-it-possible-for-science-and-logic-to-absolutely-rock. I simply think we should put more effort into this kind of question.

GB: You have both stressed, although in different ways, the differences between everyday forms of communication and the debiasing and generalizing effects afforded by formal languages. In the contemporary context, where artificial intelligence is developing apace, it seems that not only will many intuitive concepts and expert opinions be replaced with automated procedures such as statistical prediction rules, but also the criterion of fruitfulness will for many issues be redefined by AI in ways that we can hardly imagine. In Hegelian terms, Carnapian explication might be seen as historical progression via determinate negation, but here it is an alien intelligence that is tarrying with the negative. How do you think AI will alter the practical and conceptual terrain, and how can we guard against the political inequities of a scientistic subsumption of fruitfulness under exactness?

CDN: I take it that the so-called strong AI program has proven to be unsuccessful, so as a general principle, it seems to me that the most interesting way to move forward with AI is to look for ways in which artificial devices will complement rather than mimic human intelligence. This is very much in the spirit of the extended cognition approach that I developed in my 2012 monograph.9

There has always been anxiety about the impact of new technologies on human lives—recall Socrates’ mistrust of the written language! And yet, it is in the very nature of humans to be “natural born cyborgs,” as well described by Andy Clark, constantly in search of new technological developments that significantly impact our lives. It is usually very difficult if not impossible to predict the exact impact that a new technology will have; the thing with technologies is precisely that they are typically developed with certain applications in view, but then often end up having applications that no one could have foreseen. It is probably true that the technology of the digital computer will in the long run have tremendous impact, as is already noticeable, perhaps on a par with the emergence of agriculture, writing, steam engines, the press. What is not true is that this is the very first time that a new, earth-shattering technology emerges which will completely change the way humans live their lives; it is major, but not unique. As for the political dimension you ask about, here again I think there is nothing intrinsic to any specific technology that will necessarily lead to either inequities or equities; it is all about how it will be used. On the one hand, a focus on automated procedures may free up space and time in human lives to focus on other endeavors once some necessary but tedious ones are taken over by machines (I love dishwashers!), which can positively affect everyone. On the other hand, naturally the mastery of a technology confers power to the ones who master it, which may give rise to asymmetries in power relations. My only point is that this is not new to the latest technology of the digital computer and developments in AI. But time will tell, it may be too early to know at this point. 
(If anything, the real game changer may have been the development of engines operating on fossil fuel, which may well lead to the destruction of the Earth as we know it, and of human life in the medium run.)